Prepping Your Site for the Future of AI Search

Prepping Your Site (and all our clients' sites) for the Future of AI Search

We’re automatically rolling out llms.txt on all our published sites. That means all our clients’ sites are now even better prepared for AI search, and you don’t need to lift a finger.

What’s llms.txt, and why should you care?

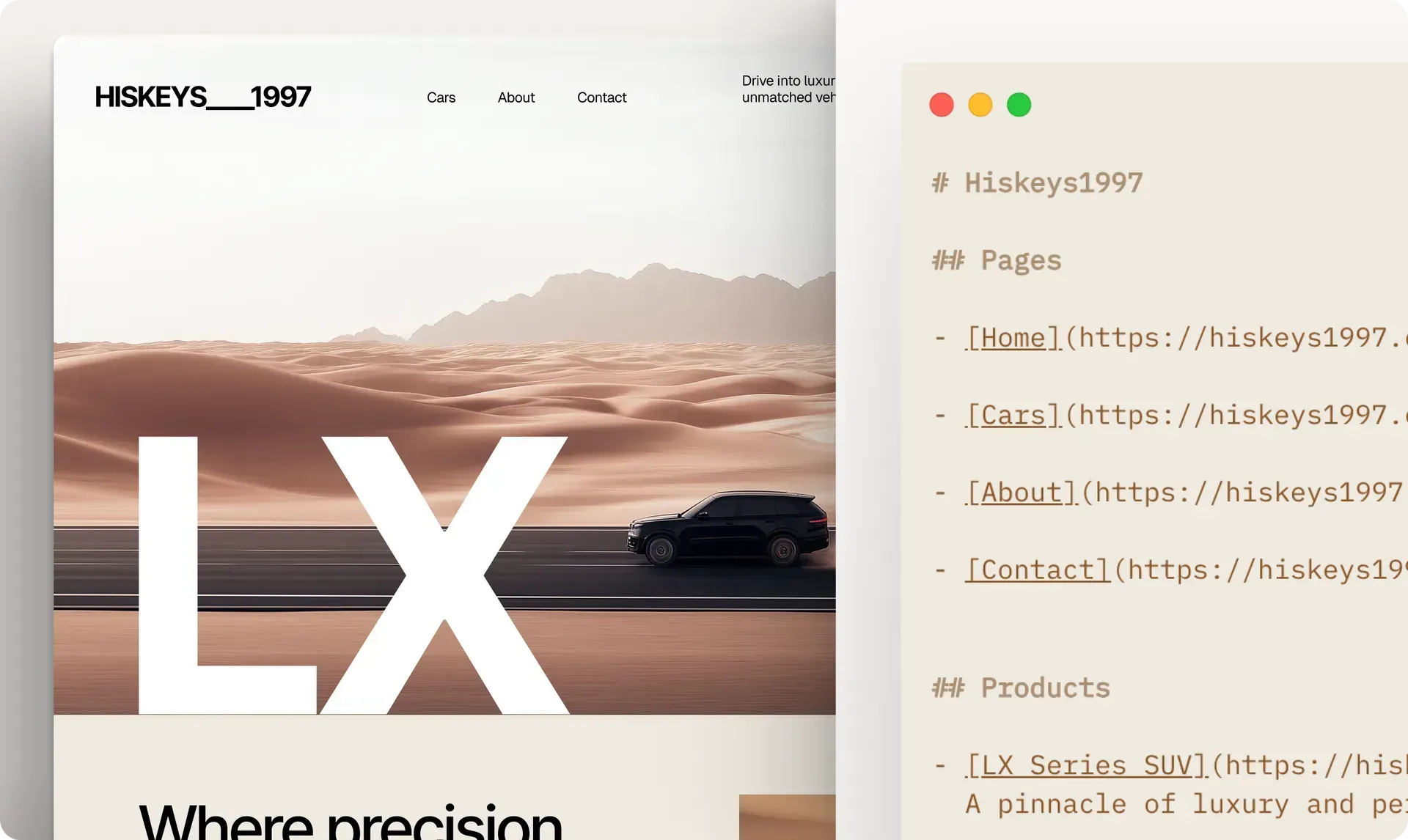

llms.txt is like a cheat sheet for AI. It's a guide for Large Language Models (LLMs), similar to how robots.txt guides search engine crawlers, but made specifically for AI. It gives tools like ChatGPT, Claude, and Perplexity a clean, structured summary of what your site is about.

Think of it as SEO for the AI era. Not for search engines, but for large language models.

So when someone asks an AI tool about your business, it can reference your actual content, not some messy, out-of-context HTML.

What it does behind the scenes

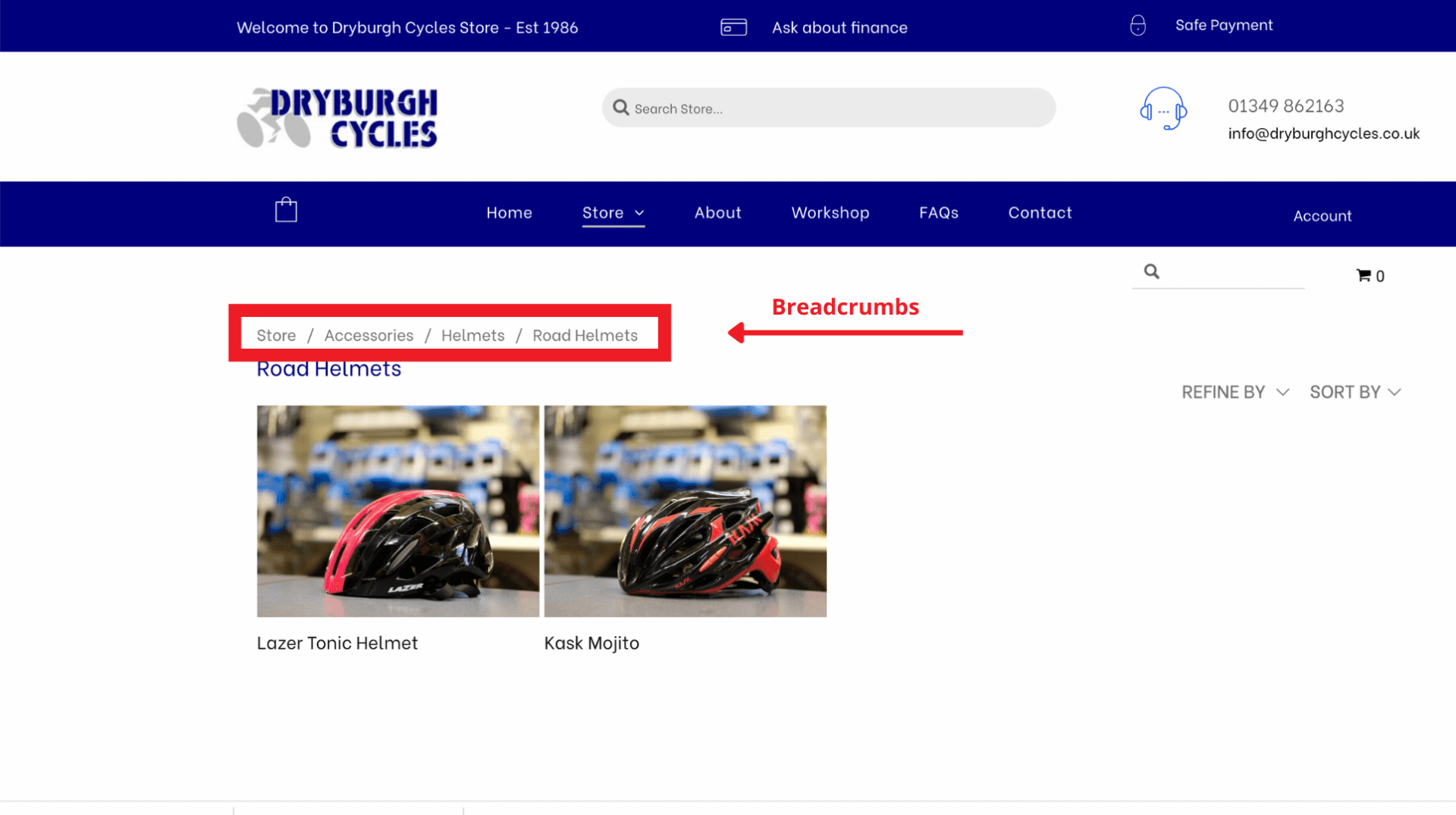

Every time you re-publish your site, our platform now auto-generates an llms.txt file that includes:

- A list of your live URLs, such as blog posts, products, categories, and multi-language pages

- The meta description of each page (if it exists; otherwise we’ll use the meta title)

- Only the good stuff: no drafts and no noindex pages

And yes, it all happens automatically, every single time we or one of our clients publishes a website.
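
For the curious, here's a rough sketch in Python of the filtering and fallback rules described above. It isn't our platform's actual code: the `Page` class, the `build_llms_txt` function, the example.com pages, and the exact output layout (a title line followed by a list of links, loosely following the public llms.txt proposal) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical record for one page of a site."""
    url: str
    meta_title: str
    meta_description: str = ""
    is_draft: bool = False
    noindex: bool = False


def build_llms_txt(site_name: str, pages: list[Page]) -> str:
    """Return llms.txt text that lists only live, indexable pages."""
    lines = [f"# {site_name}", "", "## Pages", ""]
    for page in pages:
        # Leave out drafts and pages marked noindex.
        if page.is_draft or page.noindex:
            continue
        # Prefer the meta description; fall back to the meta title.
        summary = page.meta_description.strip() or page.meta_title.strip()
        lines.append(f"- [{page.meta_title}]({page.url}): {summary}")
    return "\n".join(lines) + "\n"


# Made-up pages for a made-up site.
pages = [
    Page("https://example.com/", "Home", "Handmade candles, shipped across the UK."),
    Page("https://example.com/blog/wick-care", "Wick care tips"),
    Page("https://example.com/drafts/new-range", "New range", is_draft=True),
    Page("https://example.com/thank-you", "Thank you", noindex=True),
]

print(build_llms_txt("Example Candle Co.", pages))
```

Running it prints a short file that lists the home page with its description and the blog post with its title as the fallback, while the draft and the noindex page are left out.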

Why this matters

Most websites still don’t have llms.txt. But our websites do! That means that today, AI tools will be more likely to:

- Show our clients’ businesses accurately

- Link to their sites’ real content

- Represent our clients’ brands professionally

It’s a small file with a big impact on how our clients’ businesses show up on the AI-powered internet.

Want to see it?

Just go to your site and add /llms.txt to the end of the URL in the address bar.

That’s your AI-friendly content map in action 😎
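
If you'd rather work the address out in code, this small Python sketch builds it from any page on a site. The `llms_txt_url` helper and the example.com addresses are hypothetical, and the sketch assumes the file sits at the root of the site.

```python
from urllib.parse import urlsplit, urlunsplit


def llms_txt_url(site_url: str) -> str:
    """Return the llms.txt address at the root of the given site."""
    parts = urlsplit(site_url)
    # Keep only the scheme and host; drop any path, query, or fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/llms.txt", "", ""))


print(llms_txt_url("https://www.example.com/"))
print(llms_txt_url("https://www.example.com/blog/post?ref=1"))
```

Both calls print https://www.example.com/llms.txt, with a single slash before the file name.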

If you are not a client of BeOn Purpose, just go to https://www.beonpurpose.co.uk/llms.txt to view ours.